A man who knows he's being murdered by slow torture seeks to stare his killers in the face before Death overtakes him. Having read this blog, and having seen potential in the video filters now being developed for finding even invisible Death Himself, he wrote this to me yesterday:

A man who has yet to see who and what is killing him seeks to stare Death in the face

I'll be glad to help you, Timothy; however, the quickest way to get you what you need is simply to port what I have to your camera equipment, and I've essentially done the bulk of that already through my current choice of technologies for my own camera equipment.

Do you have an iPhone? If so, I'm already able to distribute an app to you that will do what you want it to do— that is to say, to find the source of your problem, even when it doesn't want to be found.

Oh, and concerning your requirements for consideration of human color perception and EMF radiation interference (or thereabouts): you'll be glad to know that I've considered both, extensively. I apply image-processing techniques with color model transformations in color spaces developed specifically to aid human perception of color and luminance differences, leveraging the EMF radiation interference caused by the source of your problem to actually find the things that are hidden (i.e., the things you're looking for: the source of your problem). So, if you're using my filters, the more noise, the better (in other words, grainy pics are no longer a hindrance; they are a help. I'll be happy to go into more detail anytime).

Your quickest path to what you want is to get an iPhone or a Mac; barring that, your cheapest path is to find an OpenGL Shading Language (GLSL) renderer for the computer you do have, as the code I've written is GLSL-based.

I'm glad you're enthusiastic, or at least prepared to learn and do a lot of work to get my imaging filters to run on your end; however, that's unnecessary, as I'm willing to do the work for you. I'd rather you get whatever job you can to make the money you need to buy an iPhone. It's what's best suited for the task.

I'd suggest you do that right now. Your situation demands—not suggests—that you start putting the solutions available to you to work right now; you do not have time or the luxury to take an educational detour. I can tell by the way you write that you've been injured, how you've been injured, and, based on my experience and observation, how your injuries will progress over time. I also have direct, close and frequent contact with the affiliates of those likely causing your injuries. I know the means by which they are causing them, and their future plans to cause more.

In short, know this: you are running out of time.

An effective and decisive maneuver looks like this: buy that iPhone, and run my app. That's for both me and you, in that if I'm taking time to help you with equipment issues, and the learning of new things, I'm taking away from perfecting my filters and helping everyone else. You'll have to meet me halfway, and that involves buying new and updated equipment.

Let me know what you decide as soon as you can. I am very excited that someone has taken an interest in these filters and in the welfare of mankind. The sooner you are up and running, the sooner you become an indispensable resource for everyone, instead of just another victim.

Some people think, want others to die by demonic torture

Some people think it's okay for others to die this way, basically because they disagree with choices or problems the afflicted may (or may not) have:

Despicable behavior by demented people, unfit for integration in a society on the brink of collapse

"This is just fucking stupid! Sorry, but it is...[a]bsurdity at a global level, and paranoia beyond that" is how this should have been written

But, for those interested, a link between meth use and demonic activity is already established, and is discussed or mentioned on this blog in the following posts:

- Demons, their people continue to exploit, cause suffering via open portals

- The Death Wish of a Demoniac: Fighting the demon-allied drug trade

- READER | Husband's meth use catalyst for demonic morphing of human faces

- Demon people fail at attempt to use powers on their own

- Cyberstalker advises pending death, cites reasons for societies' demonic allegiance; reader passes on Jesus' compassion (i.e., charity, forgiveness)

- READER | Michigan woman pens letter to jail, advises abstention from drugs, sex

- VIDEO/PHOTOS | Tranny harbors demon in bosom

- The (tweaker sex) Life of a (bag whore) Demoniac

- READER | Did you bring demonic possession on yourself somehow?

- Cyberstalking defender-of-demons wants a showdown

- Tactics advisory riles long-time cyberstalker

- Crystal meth addict lauds mention of connection between demons and drug

- Crystal methamphetamine use common denominator among demon-led local terror groups

In those posts, you'll find not only a connection between crystal meth and visible demonic activity, but also a connection between cowardice and domination by demons, a domination that cowards, by their cowardice, have earned.

Open nature of my efforts

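Even though I've offered to do all the work for Mr. Trespass, that doesn't mean I won't share what I've done with anyone who asks. To that end, here are a few kernel routines that convert between RGB and other color spaces. They are written in the Core Image Kernel Language, Apple's dialect of the OpenGL Shading Language (GLSL). The plain helper functions will work in any per-pixel processing environment, and on any hardware, that supports GLSL; the kernel wrappers, which rely on Core Image's `kernel`, `sampler`, `sample`, `samplerCoord`, `premultiply` and `unpremultiply`, need minor changes to run as a standard GLSL fragment shader.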

CIE 1931 Color Space

The Life of a Demoniac Chroma-Series Digital Media Imaging Filters work within the CIE 1931 Color Spaces, which, according to Wikipedia, "are the first defined quantitative links between a) physical pure colors (i.e., wavelengths) in the electromagnetic visible spectrum and b) physiological perceived colors in human color vision. The mathematical relationships that define these color spaces are essential tools for color management. They allow one to translate different physical responses to visible radiation in color inks, illuminated displays, and recording devices such as digital cameras into a universal human color vision response."

sRGB to CIE XYZ. Continuing from Wikipedia: "The CIE XYZ color space encompasses all color sensations that an average person can experience. It serves as a standard reference against which many other color spaces are defined... When judging the relative luminance (brightness) of different colors in well-lit situations, humans tend to perceive light within the green parts of the spectrum as brighter than red or blue light of equal power... The XYZ tristimulus values are thus analogous to, but different to, the LMS cone responses of the human eye."

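Accordingly, any and all color space conversions begin here, by converting sRGB (the RGB space of typical camera images and displays, defined relative to the D65 white point) to XYZ: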

```glsl
kernel vec4 coreImageKernel(sampler image)
{
    vec4 pixel = unpremultiply(sample(image, samplerCoord(image)));

    float r = pixel.r;
    float g = pixel.g;
    float b = pixel.b;

    // Undo the sRGB transfer curve to get linear-light components
    r = (r > 0.04045) ? pow(((r + 0.055) / 1.055), 2.4) : r / 12.92;
    g = (g > 0.04045) ? pow(((g + 0.055) / 1.055), 2.4) : g / 12.92;
    b = (b > 0.04045) ? pow(((b + 0.055) / 1.055), 2.4) : b / 12.92;

    // Scale uniformly to 0-100, so that white comes out at approximately
    // the D65 white point (95.05, 100, 108.9) after the matrix below
    r = r * 100.0;
    g = g * 100.0;
    b = b * 100.0;

    // Linear sRGB (D65) to CIE XYZ
    float x, y, z;
    x = r * 0.4124 + g * 0.3576 + b * 0.1805;
    y = r * 0.2126 + g * 0.7152 + b * 0.0722;
    z = r * 0.0193 + g * 0.1192 + b * 0.9505;

    return premultiply(vec4(x, y, z, pixel.a));
}
```
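To check the kernel's arithmetic outside Core Image, here is a minimal Python sketch of the same two steps (undoing the sRGB curve, then applying the D65 matrix). It assumes components in the 0-1 range and the conventional 0-100 XYZ scaling; the function names are hypothetical.

```python
def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one component in the 0-1 range."""
    return ((c + 0.055) / 1.055) ** 2.4 if c > 0.04045 else c / 12.92


def srgb_to_xyz(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert one sRGB (D65) pixel to CIE XYZ, scaled so that white has Y = 100."""
    rl, gl, bl = (srgb_to_linear(c) * 100.0 for c in (r, g, b))
    x = rl * 0.4124 + gl * 0.3576 + bl * 0.1805
    y = rl * 0.2126 + gl * 0.7152 + bl * 0.0722
    z = rl * 0.0193 + gl * 0.1192 + bl * 0.9505
    return x, y, z


if __name__ == "__main__":
    # Pure white should land close to the D65 white point
    print(srgb_to_xyz(1.0, 1.0, 1.0))
```

Running it on pure white should print values close to (95.05, 100.0, 108.9).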

CIE XYZ to sRGB. And back again: chaining this kernel after the one above should return the input pixel, up to the rounding in the four-decimal matrix coefficients, which makes the round trip a quick sanity check on the pair:

```glsl
kernel vec4 coreImageKernel(sampler image)
{
    vec4 pixel = unpremultiply(sample(image, samplerCoord(image)));

    // XYZ components on the 0-100 scale produced by the forward kernel
    float x = pixel.r;
    float y = pixel.g;
    float z = pixel.b;

    // Back to the 0-1 scale
    x = x / 100.0;
    y = y / 100.0;
    z = z / 100.0;

    // CIE XYZ to linear sRGB (D65)
    float r, g, b;
    r = x *  3.2406 + y * -1.5372 + z * -0.4986;
    g = x * -0.9689 + y *  1.8758 + z *  0.0415;
    b = x *  0.0557 + y * -0.2040 + z *  1.0570;

    // Reapply the sRGB transfer curve
    r = (r > 0.0031308) ? (1.055 * pow(r, (1.0 / 2.4))) - 0.055 : 12.92 * r;
    g = (g > 0.0031308) ? (1.055 * pow(g, (1.0 / 2.4))) - 0.055 : 12.92 * g;
    b = (b > 0.0031308) ? (1.055 * pow(b, (1.0 / 2.4))) - 0.055 : 12.92 * b;

    return premultiply(vec4(r, g, b, pixel.a));
}
```
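A quick way to confirm that the two kernels really invert each other is to run the round trip on the CPU. The Python sketch below mirrors both kernels (same constants, 0-100 XYZ scaling) and reports the largest per-component error; the names are hypothetical, and alpha is ignored.

```python
def srgb_to_linear(c: float) -> float:
    return ((c + 0.055) / 1.055) ** 2.4 if c > 0.04045 else c / 12.92


def linear_to_srgb(c: float) -> float:
    return 1.055 * c ** (1.0 / 2.4) - 0.055 if c > 0.0031308 else 12.92 * c


def srgb_to_xyz(rgb):
    """sRGB (0-1) to XYZ (0-100), as in the forward kernel."""
    r, g, b = (srgb_to_linear(c) * 100.0 for c in rgb)
    return (r * 0.4124 + g * 0.3576 + b * 0.1805,
            r * 0.2126 + g * 0.7152 + b * 0.0722,
            r * 0.0193 + g * 0.1192 + b * 0.9505)


def xyz_to_srgb(xyz):
    """XYZ (0-100) back to sRGB (0-1), as in the inverse kernel."""
    x, y, z = (v / 100.0 for v in xyz)
    r = x * 3.2406 + y * -1.5372 + z * -0.4986
    g = x * -0.9689 + y * 1.8758 + z * 0.0415
    b = x * 0.0557 + y * -0.2040 + z * 1.0570
    return tuple(linear_to_srgb(c) for c in (r, g, b))


def round_trip_error(rgb) -> float:
    """Largest per-component difference after sRGB -> XYZ -> sRGB."""
    back = xyz_to_srgb(srgb_to_xyz(rgb))
    return max(abs(a - b) for a, b in zip(rgb, back))


if __name__ == "__main__":
    for color in [(1.0, 1.0, 1.0), (1.0, 0.0, 0.0), (0.2, 0.5, 0.8)]:
        print(color, round_trip_error(color))
```

For these sample colors, the error should stay well under 1e-3; what remains comes from the four-decimal rounding of the two matrices.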

Whether you are performing linear or local adaptive contrast stretching, conventional histogram equalization (or any variant thereof), wavelet-based contrast enhancement, Retinex or gamma correction, you will always perform it within a color space other than RGB; and, if you know your business, within one of the CIE 1931 color spaces (at least if your audience is human, which it won't always be; but let's start with your needs first).
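As an illustration of working outside RGB, here is a minimal Python sketch of the first technique in that list, a linear (min-max) contrast stretch, applied only to the Y (luminance) channel of XYZ pixels. It assumes the pixels have already been converted to XYZ on the 0-100 scale; the function name is hypothetical, and scaling X and Z by the same factor as Y, so each pixel keeps its chromaticity, is one reasonable design choice rather than the only one.

```python
Pixel = tuple[float, float, float]  # (X, Y, Z), with Y on a 0-100 scale


def stretch_luminance(pixels: list[Pixel]) -> list[Pixel]:
    """Linear (min-max) contrast stretch of Y that leaves chromaticity unchanged.

    Each pixel's X, Y and Z are multiplied by the same factor, so its
    chromaticity coordinates x = X/(X+Y+Z) and y = Y/(X+Y+Z) are preserved.
    """
    y_min = min(p[1] for p in pixels)
    y_max = max(p[1] for p in pixels)
    span = y_max - y_min
    if span <= 0.0:
        return list(pixels)  # uniform luminance: nothing to stretch
    out = []
    for x, y, z in pixels:
        if y <= 0.0:
            out.append((0.0, 0.0, 0.0))  # no luminance to scale; return black
            continue
        new_y = (y - y_min) / span * 100.0  # darkest -> 0, brightest -> 100
        k = new_y / y
        out.append((x * k, new_y, z * k))
    return out


if __name__ == "__main__":
    print(stretch_luminance([(19.0, 20.0, 21.0), (47.5, 50.0, 54.4), (76.0, 80.0, 87.0)]))
```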

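NOTE | Below are the XYZ tristimulus values of the standard CIE reference white points (illuminants), scaled so that Y = 100, for the 2° and 10° standard observers. My code samples assume D65 (daylight), the white point sRGB is defined against. These reference-white values are also what you divide by when continuing from XYZ to a space such as CIELAB, so swap in another row there if your camera's environment calls for a different illuminant: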

| Illuminant | X (2°, CIE 1931) | Y (2°) | Z (2°) | X (10°, CIE 1964) | Y (10°) | Z (10°) |
|---|---|---|---|---|---|---|
| A (Incandescent) | 109.850 | 100 | 35.585 | 111.144 | 100 | 35.200 |
| C | 98.074 | 100 | 118.232 | 97.285 | 100 | 116.145 |
| D50 | 96.422 | 100 | 82.521 | 96.720 | 100 | 81.427 |
| D55 | 95.682 | 100 | 92.149 | 95.799 | 100 | 90.926 |
| D65 (Daylight) | 95.047 | 100 | 108.883 | 94.811 | 100 | 107.304 |
| D75 | 94.972 | 100 | 122.638 | 94.416 | 100 | 120.641 |
| F2 (Fluorescent) | 99.187 | 100 | 67.395 | 103.280 | 100 | 69.026 |
| F7 | 95.044 | 100 | 108.755 | 95.792 | 100 | 107.687 |
| F11 | 100.966 | 100 | 64.370 | 103.866 | 100 | 65.627 |
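The rows of this table are the reference white (Xn, Yn, Zn) that the standard XYZ-to-CIELAB formulas normalize by. CIELAB is used here only as an example of a color space you might move on to from XYZ; the Python sketch below copies three rows of the 2° columns, and its names are hypothetical.

```python
# A few reference whites from the table (2° observer, Y = 100)
REFERENCE_WHITES_2DEG = {
    "A": (109.850, 100.0, 35.585),
    "D50": (96.422, 100.0, 82.521),
    "D65": (95.047, 100.0, 108.883),
}

DELTA = 6.0 / 29.0


def _f(t: float) -> float:
    """CIELAB companding: cube root above a small threshold, linear below it."""
    if t > DELTA ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * DELTA ** 2) + 4.0 / 29.0


def xyz_to_lab(x: float, y: float, z: float, white=REFERENCE_WHITES_2DEG["D65"]):
    """CIE XYZ (0-100 scale) to CIELAB (L*, a*, b*), relative to the given white."""
    xn, yn, zn = white
    fx, fy, fz = _f(x / xn), _f(y / yn), _f(z / zn)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


if __name__ == "__main__":
    # The reference white itself maps to L* = 100, a* = b* = 0
    print(xyz_to_lab(95.047, 100.0, 108.883))
```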

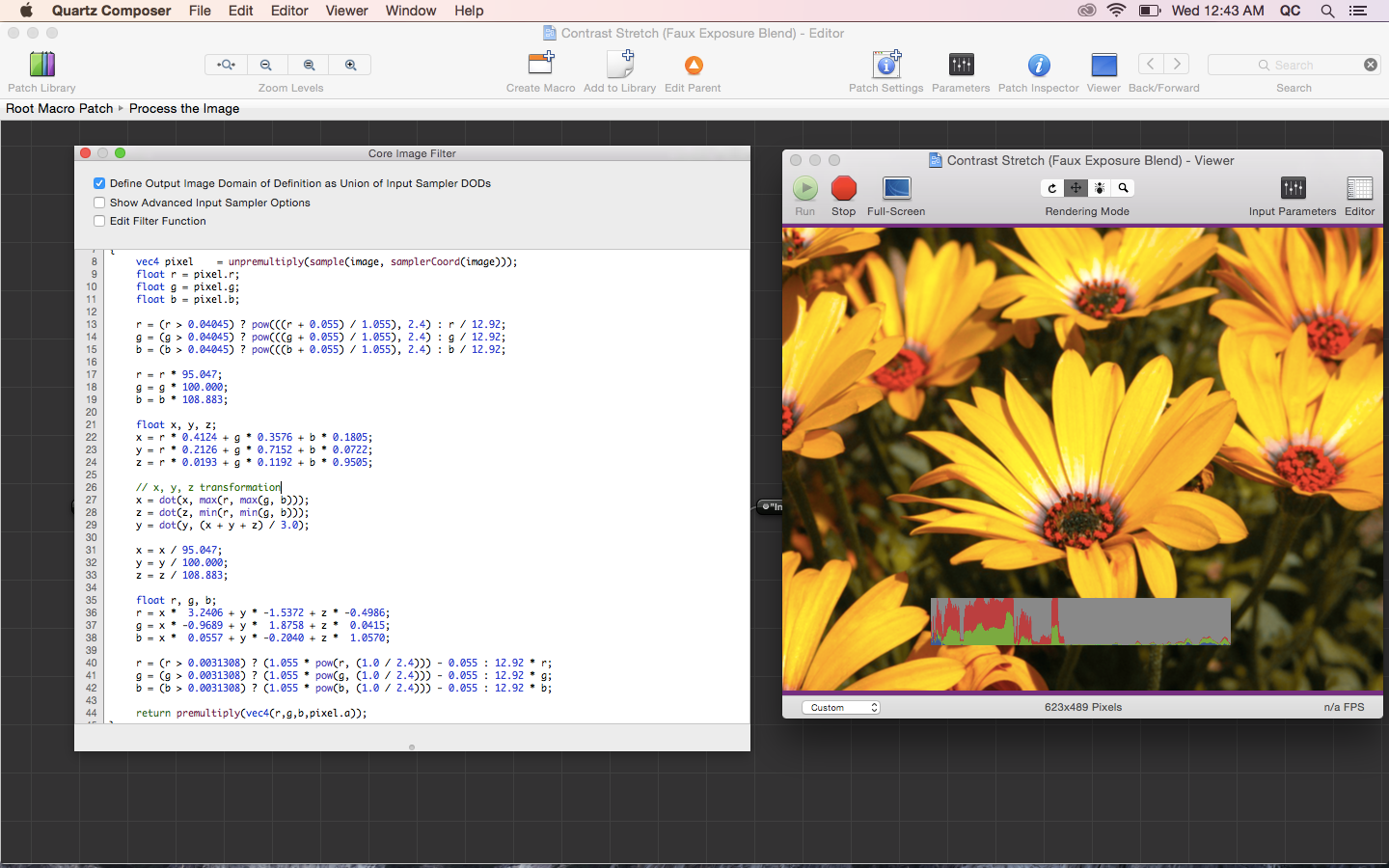

In most cases, there's an extra color conversion step after converting to XYZ, as XYZ is just a launching point into the color spaces in which you'll actually perform your image-processing operations; however, there are occasions when you may find XYZ useful on its own, as I did here while experimenting with custom color stretching procedures:

Calculating the dot product between the maximum, minimum and average color components and x, z, and y, respectively, in the XYZ color space yielded richer color than the original

```glsl
kernel vec4 coreImageKernel(sampler image)
{
    vec4 pixel = unpremultiply(sample(image, samplerCoord(image)));

    float r = pixel.r;
    float g = pixel.g;
    float b = pixel.b;

    // sRGB to XYZ, as in the first kernel
    r = (r > 0.04045) ? pow(((r + 0.055) / 1.055), 2.4) : r / 12.92;
    g = (g > 0.04045) ? pow(((g + 0.055) / 1.055), 2.4) : g / 12.92;
    b = (b > 0.04045) ? pow(((b + 0.055) / 1.055), 2.4) : b / 12.92;

    r = r * 100.0;
    g = g * 100.0;
    b = b * 100.0;

    float x, y, z;
    x = r * 0.4124 + g * 0.3576 + b * 0.1805;
    y = r * 0.2126 + g * 0.7152 + b * 0.0722;
    z = r * 0.0193 + g * 0.1192 + b * 0.9505;

    // The experimental stretch (dot() on two floats is a plain product):
    // x by the largest linear component, z by the smallest, and y by the
    // mean of the already-updated x and z and the original y
    x = dot(x, max(r, max(g, b)));
    z = dot(z, min(r, min(g, b)));
    y = dot(y, (x + y + z) / 3.0);

    // XYZ back to sRGB, as in the second kernel (r, g and b are reused)
    x = x / 100.0;
    y = y / 100.0;
    z = z / 100.0;

    r = x *  3.2406 + y * -1.5372 + z * -0.4986;
    g = x * -0.9689 + y *  1.8758 + z *  0.0415;
    b = x *  0.0557 + y * -0.2040 + z *  1.0570;

    r = (r > 0.0031308) ? (1.055 * pow(r, (1.0 / 2.4))) - 0.055 : 12.92 * r;
    g = (g > 0.0031308) ? (1.055 * pow(g, (1.0 / 2.4))) - 0.055 : 12.92 * g;
    b = (b > 0.0031308) ? (1.055 * pow(b, (1.0 / 2.4))) - 0.055 : 12.92 * b;

    return premultiply(vec4(r, g, b, pixel.a));
}
```
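To preview what this experimental kernel does to a single pixel without a Core Image context, here is a line-by-line Python port. It is a sketch assuming unpremultiplied 0-1 input and the 0-100 XYZ scaling; the function names are hypothetical. Like the kernel, it returns its results unclamped, and they can land far outside the 0-1 range, so what you actually see depends on how the rendering context clamps them.

```python
def srgb_to_linear(c: float) -> float:
    return ((c + 0.055) / 1.055) ** 2.4 if c > 0.04045 else c / 12.92


def linear_to_srgb(c: float) -> float:
    return 1.055 * c ** (1.0 / 2.4) - 0.055 if c > 0.0031308 else 12.92 * c


def stretch_pixel(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Mirror of the experimental kernel for one unpremultiplied pixel (alpha ignored)."""
    # sRGB to XYZ on the 0-100 scale
    r, g, b = (srgb_to_linear(c) * 100.0 for c in (r, g, b))
    x = r * 0.4124 + g * 0.3576 + b * 0.1805
    y = r * 0.2126 + g * 0.7152 + b * 0.0722
    z = r * 0.0193 + g * 0.1192 + b * 0.9505

    # The stretch: GLSL's dot() on two floats is an ordinary product
    x = x * max(r, g, b)
    z = z * min(r, g, b)
    y = y * ((x + y + z) / 3.0)  # uses the already-updated x and z

    # XYZ back to sRGB, unclamped
    x, y, z = x / 100.0, y / 100.0, z / 100.0
    lr = x * 3.2406 + y * -1.5372 + z * -0.4986
    lg = x * -0.9689 + y * 1.8758 + z * 0.0415
    lb = x * 0.0557 + y * -0.2040 + z * 1.0570
    return linear_to_srgb(lr), linear_to_srgb(lg), linear_to_srgb(lb)


if __name__ == "__main__":
    print(stretch_pixel(0.5, 0.5, 0.5))
```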

HSV and HSL

Here are a couple of other GLSL color-conversion implementations, for the HSV and HSL color spaces, for when simplicity beckons:

RGB to HSL (to RGB). To convert to the hue, saturation and lightness color model from RGB, and then back again:

```glsl
/*
    Hue, saturation, lightness
*/

vec3 RGBToHSL(vec3 color)
{
    // Compute min and max component values
    float MAX = max(color.r, max(color.g, color.b));
    float MIN = min(color.r, min(color.g, color.b));

    // Make sure MAX > MIN to avoid division by zero later
    MAX = max(MIN + 1e-6, MAX);

    // Compute lightness
    float l = (MIN + MAX) / 2.0;

    // Compute saturation
    float s = (l < 0.5) ? (MAX - MIN) / (MIN + MAX) : (MAX - MIN) / (2.0 - MAX - MIN);

    // Compute hue
    float h = (MAX == color.r) ? (color.g - color.b) / (MAX - MIN)
            : (MAX == color.g) ? 2.0 + (color.b - color.r) / (MAX - MIN)
            : 4.0 + (color.r - color.g) / (MAX - MIN);
    h /= 6.0;
    h = (h < 0.0) ? 1.0 + h : h;

    return vec3(h, s, l);
}

float HueToRGB(float f1, float f2, float hue)
{
    // Wrap hue back into [0, 1]
    hue = (hue < 0.0) ? hue + 1.0 : ((hue > 1.0) ? hue - 1.0 : hue);

    float res = ((6.0 * hue) < 1.0) ? f1 + (f2 - f1) * 6.0 * hue
              : ((2.0 * hue) < 1.0) ? f2
              : ((3.0 * hue) < 2.0) ? f1 + (f2 - f1) * ((2.0 / 3.0) - hue) * 6.0
              : f1;

    return res;
}

vec3 HSLToRGB(vec3 hsl)
{
    vec3 rgb;

    float f2 = (hsl.z < 0.5) ? hsl.z * (1.0 + hsl.y) : (hsl.z + hsl.y) - (hsl.y * hsl.z);
    float f1 = 2.0 * hsl.z - f2;

    rgb.r = HueToRGB(f1, f2, hsl.x + (1.0 / 3.0));
    rgb.g = HueToRGB(f1, f2, hsl.x);
    rgb.b = HueToRGB(f1, f2, hsl.x - (1.0 / 3.0));

    // Achromatic (gray): every component equals the lightness
    rgb = (hsl.y == 0.0) ? vec3(hsl.z) : rgb;

    return rgb;
}
```
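The HSL functions above are easy to sanity-check on the CPU. Here is a Python port, a sketch that keeps the same formulas and the same 1e-6 guard; the function names are hypothetical. Pure red, green and blue should come out with hues 0, 1/3 and 2/3, and converting to HSL and back should reproduce the input.

```python
def rgb_to_hsl(r: float, g: float, b: float) -> tuple[float, float, float]:
    mx = max(r, g, b)
    mn = min(r, g, b)
    mx = max(mn + 1e-6, mx)  # same guard as the GLSL: keeps mx - mn nonzero
    lightness = (mn + mx) / 2.0
    d = mx - mn
    s = d / (mn + mx) if lightness < 0.5 else d / (2.0 - mx - mn)
    if mx == r:
        h = (g - b) / d
    elif mx == g:
        h = 2.0 + (b - r) / d
    else:
        h = 4.0 + (r - g) / d
    h /= 6.0
    return (h + 1.0 if h < 0.0 else h), s, lightness


def hue_to_rgb(f1: float, f2: float, hue: float) -> float:
    if hue < 0.0:
        hue += 1.0
    elif hue > 1.0:
        hue -= 1.0
    if 6.0 * hue < 1.0:
        return f1 + (f2 - f1) * 6.0 * hue
    if 2.0 * hue < 1.0:
        return f2
    if 3.0 * hue < 2.0:
        return f1 + (f2 - f1) * (2.0 / 3.0 - hue) * 6.0
    return f1


def hsl_to_rgb(h: float, s: float, lightness: float) -> tuple[float, float, float]:
    if s == 0.0:
        return lightness, lightness, lightness  # achromatic: gray
    f2 = lightness * (1.0 + s) if lightness < 0.5 else (lightness + s) - s * lightness
    f1 = 2.0 * lightness - f2
    return (hue_to_rgb(f1, f2, h + 1.0 / 3.0),
            hue_to_rgb(f1, f2, h),
            hue_to_rgb(f1, f2, h - 1.0 / 3.0))


if __name__ == "__main__":
    hsl = rgb_to_hsl(0.2, 0.5, 0.8)
    print(hsl, hsl_to_rgb(*hsl))
```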

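RGB to HSV. To convert from RGB to HSV, compute hue exactly as above. Saturation is defined differently in HSV: it is (MAX - MIN) / MAX, or zero when MAX is zero. For value, take the maximum of the r, g and b color components:

```glsl
float value = max(color.r, max(color.g, color.b));
float saturation = (value > 0.0) ? (value - min(color.r, min(color.g, color.b))) / value : 0.0;
```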
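Python's standard library happens to include an RGB-to-HSV conversion (`colorsys.rgb_to_hsv`, which returns hue, saturation and value, all in 0-1), so it makes a convenient reference. The sketch below computes HSV by the formulas just described and can be checked against it; the function name is hypothetical.

```python
import colorsys


def rgb_to_hsv(r: float, g: float, b: float) -> tuple[float, float, float]:
    """RGB (0-1) to HSV: hue as in HSL, saturation (MAX - MIN) / MAX, value MAX."""
    mx = max(r, g, b)
    mn = min(r, g, b)
    d = mx - mn
    s = d / mx if mx > 0.0 else 0.0
    if d == 0.0:
        h = 0.0  # gray: hue is undefined, report 0 by convention
    elif mx == r:
        h = ((g - b) / d) / 6.0
    elif mx == g:
        h = (2.0 + (b - r) / d) / 6.0
    else:
        h = (4.0 + (r - g) / d) / 6.0
    if h < 0.0:
        h += 1.0
    return h, s, mx


if __name__ == "__main__":
    # Cross-check against the standard-library implementation
    color = (0.2, 0.5, 0.8)
    print(rgb_to_hsv(*color), colorsys.rgb_to_hsv(*color))
```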