This tip belongs with the other posts on this blog pertaining to Chroma, and with any other post about processing digital media to decloak the cloaked. It's intended for software programmers, but it is also useful to anyone who is not, in that it shows them what they need to aim for given what they're facing.

This post also attempts to correct mistakes in other posts that purport to calculate and employ variance and standard deviation. Those posts may do so correctly on a local basis, but they do not do so on a global basis. The results of the mistakes were not uninteresting, to be sure; but they misrepresent the meaning and purpose of the measurements, and generally make me look like I've got demons flying in and out of my butt all day and, accordingly, am not putting as much concentration into my work as is required.

Most image-processing work of import and consequence (exposure fusion, for example) requires the calculation of an image's global variance and standard deviation.

Figure: The formula for calculating standard deviation (or variance, without the square root)
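For reference, the standard textbook definitions can be written out as follows. This assumes the population form (dividing by the pixel count N rather than N − 1), which is what an averaging step such as the one described later in this post produces. The symbols x_i (the value of pixel i), N (the number of pixels), and μ (the global mean) are notation chosen here, not taken from the original figure.

```latex
\mu = \frac{1}{N}\sum_{i=1}^{N} x_i
\qquad
\sigma^2 = \frac{1}{N}\sum_{i=1}^{N} \left(x_i - \mu\right)^2
\qquad
\sigma = \sqrt{\sigma^2}
```

Here σ² is the variance and σ is the standard deviation; the only difference between them is the final square root.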

Like the global average (or mean), these two calculations factor into many of the formulas employed by some of the most common image filters, such as the Gaussian function. Yet most programmers, researchers, and educators are either too lazy or too ignorant to calculate the true value, and instead supply an arbitrary value in place of the σ symbol used in a given formula:

Figure: Astoundingly, even the world's leading scholarly publication on exposure fusion suggests an arbitrary value for the standard deviation variable of the Gaussian curve function
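To make the point concrete, here is a minimal Python sketch of a Gaussian curve that uses a measured σ instead of a hand-picked constant. The function name `gaussian_weight` and the sample values are hypothetical, and this is only an illustration of the general Gaussian form; it is not the weighting used in any particular exposure fusion paper or filter.

```python
import math

def gaussian_weight(value, mean, sigma):
    """Unnormalized Gaussian curve: exp(-(value - mean)^2 / (2 * sigma^2))."""
    return math.exp(-((value - mean) ** 2) / (2.0 * sigma ** 2))

# Made-up sample of pixel intensities in the range 0..1.
pixels = [0.1, 0.4, 0.5, 0.6, 0.9]

# Measure the mean and the (population) standard deviation from the data
# instead of plugging in an arbitrary sigma.
mean = sum(pixels) / len(pixels)
sigma = math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))

# Weight each pixel by how close it sits to the measured mean.
weights = [gaussian_weight(p, mean, sigma) for p in pixels]
```

A pixel that sits exactly on the mean gets a weight of 1, and the weights fall off symmetrically on either side. Note that the sketch assumes the sample is not perfectly flat; a σ of zero would cause a division by zero.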

The reason for this inexcusable sloppiness is probably the difficulty of the calculation and the limits of available technology. While Core Image supplies a stock filter for calculating the image mean (the first step in calculating the other two), it does not provide one for either variance or standard deviation. What frustrates and stymies most Core Image programmers in their attempt to calculate these two image measurements is capital sigma (∑), the summation operator found in nearly every commonly used formula related to image processing.

That's because there's no stock Core Image filter for summing every pixel in an image, which means that custom coding is required on the part of the programmer: specifically, code that reads each pixel value into an array and then sums the values. That's not too difficult once you learn how; but no matter how efficient the code or how fast the processor, it slows application performance to a crawl.

NOTE | Somehow, Apple managed to achieve this with its stock mean filter with only a 25%-50% decrease in overall performance; unfortunately, it's a mystery as to how.
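For readers who want to see what that per-pixel approach amounts to, here is a minimal Python sketch. It is not Core Image code; the tiny single-channel `image` and the function name `global_mean` are made up for illustration, and a real image would have millions of pixels and several channels, which is exactly why this loop is slow.

```python
# A tiny grayscale "image" as rows of pixel values in 0..1 (made-up data).
image = [
    [0.0, 0.5, 1.0],
    [0.25, 0.5, 0.75],
]

def global_mean(pixels):
    """Visit every pixel one at a time, sum the values, divide by the count."""
    total = 0.0
    count = 0
    for row in pixels:
        for value in row:
            total += value
            count += 1
    return total / count

mean = global_mean(image)
```

Every pixel has to be read back into ordinary memory and visited individually, so the cost grows with the size of the image.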

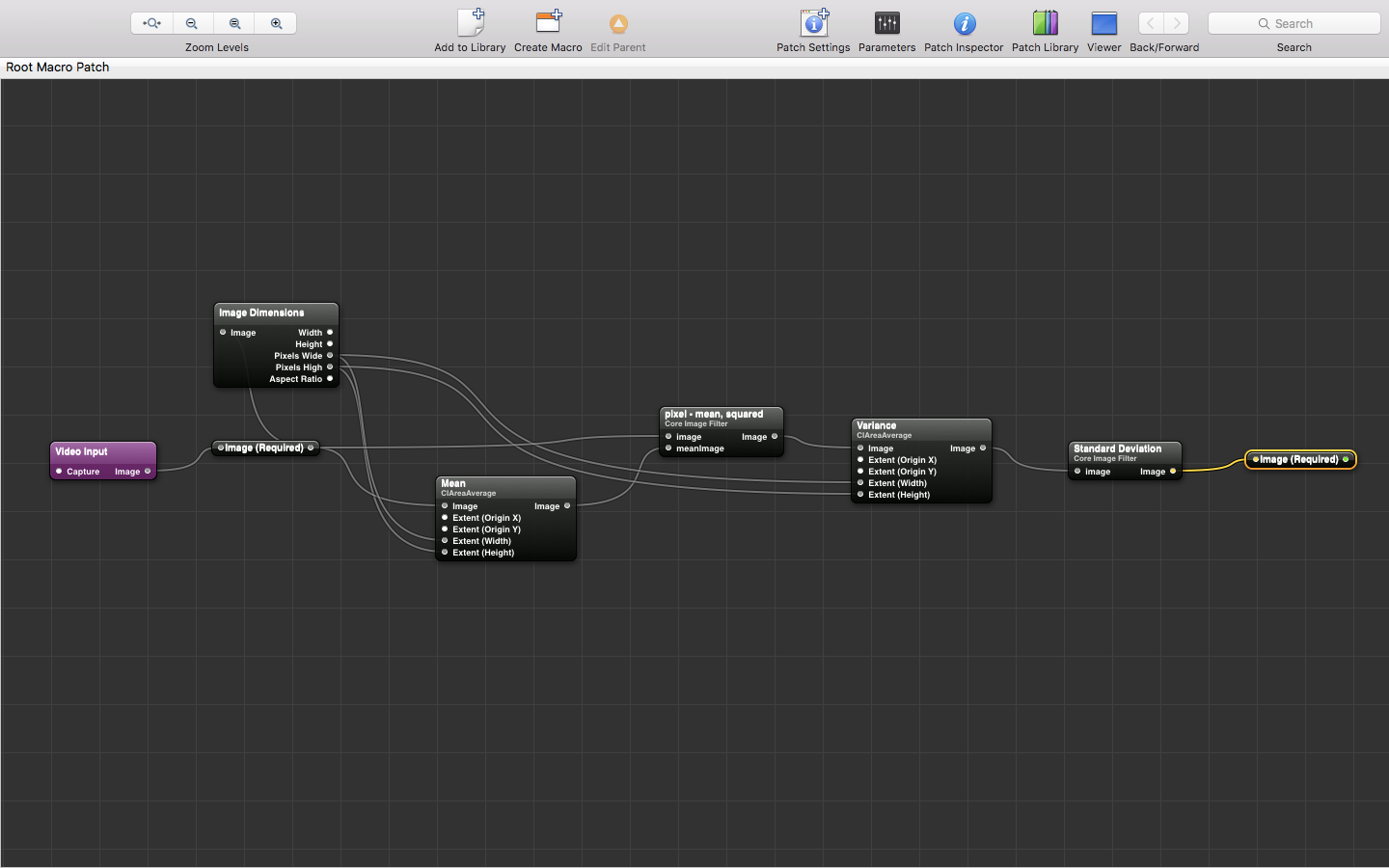

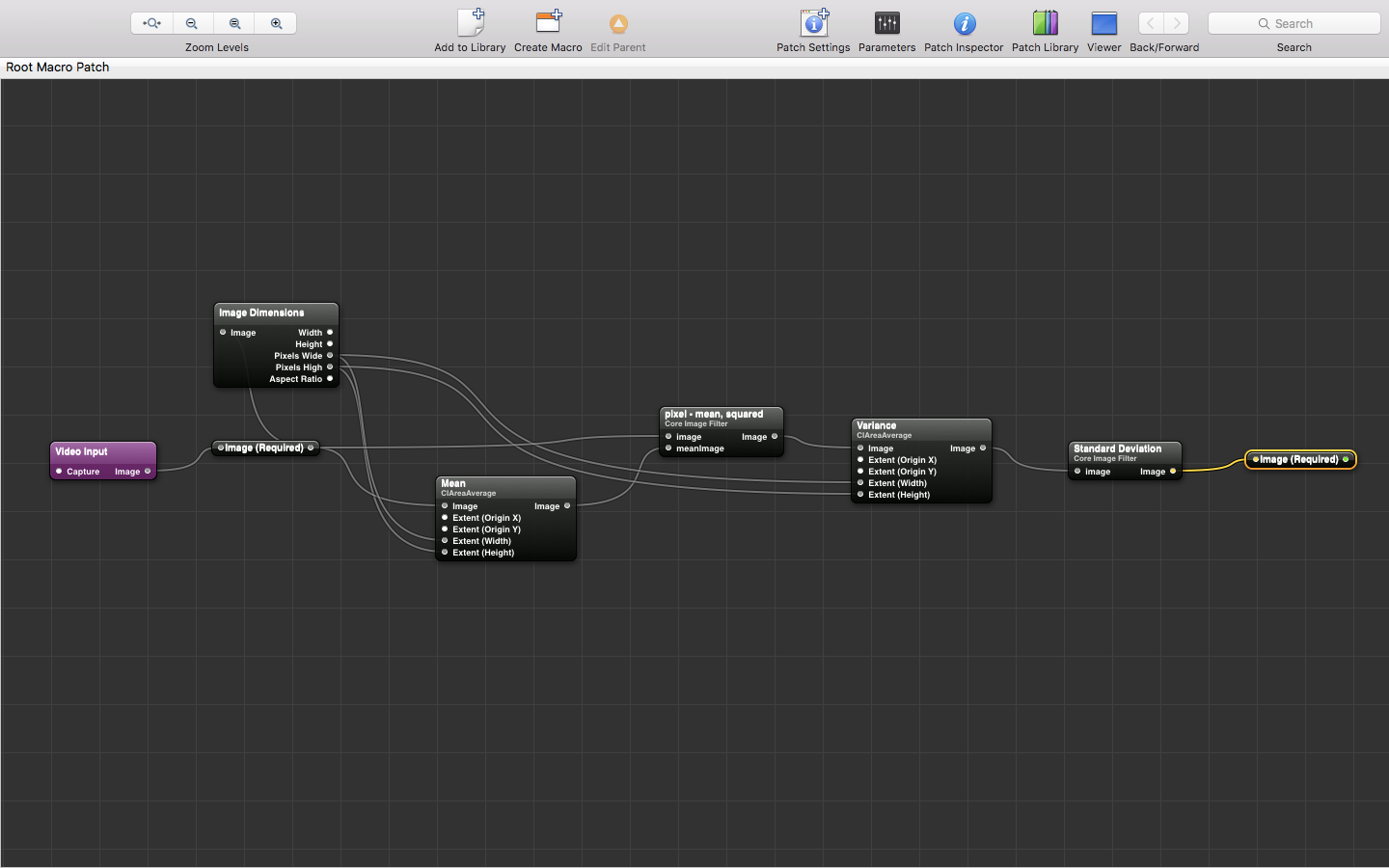

But, with a little creativity and kludging, there is a way to calculate these two measurements without resorting to custom per-pixel summation code. Here's an overview of the image-processing pipeline that obtains these two measurements, illustrated with Quartz Composer (Xcode implementations available upon request):

Figure: An overview of the image-processing pipeline for calculating variance and standard deviation with Quartz Composer [download from MediaFire]

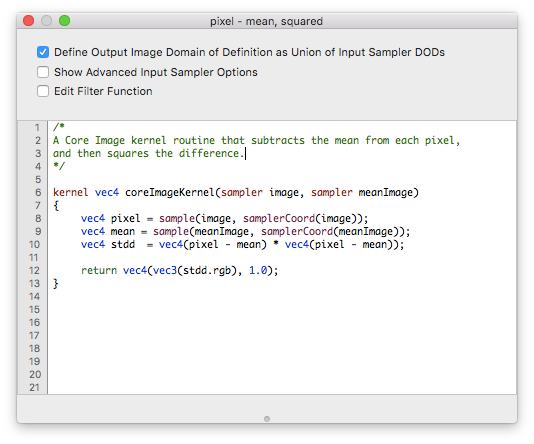

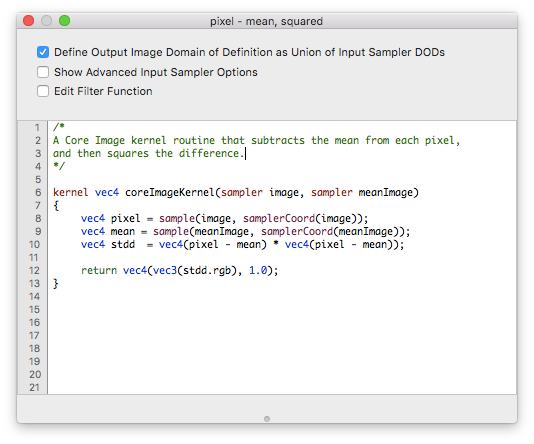

As shown above, the first step is to calculate the image mean, which is easily done using the CIAreaAverage filter provided in Core Image by Apple. The second step is to pass the output of the CIAreaAverage filter and the source image to a custom Core Image filter that subtracts the mean from each pixel in the source image, and then squares the difference:

Figure: A custom Core Image filter that subtracts the mean from each pixel in the source image, and then squares the difference

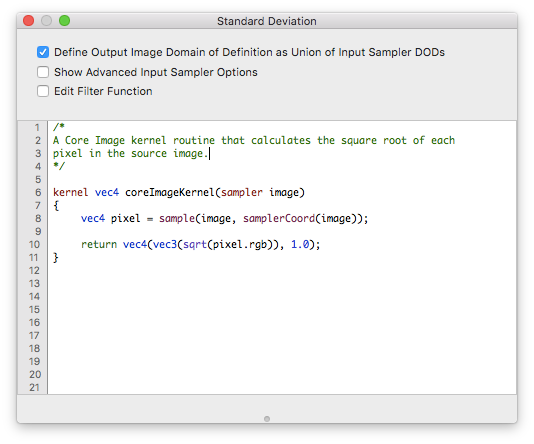

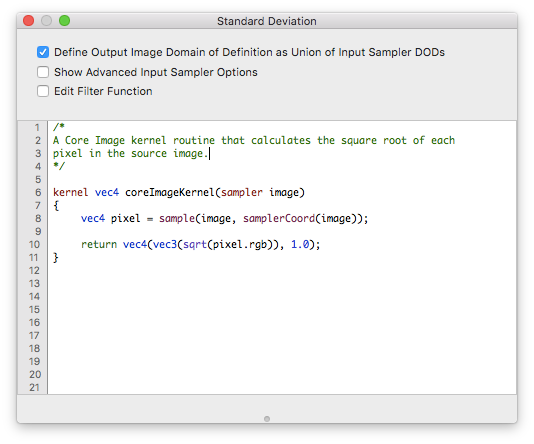
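The subtract-and-square step described above can be sketched in plain Python. This is not the Core Image kernel itself, only the same arithmetic applied to a made-up single-channel image; the function name `squared_difference_image` is hypothetical, and the mean is passed in as a plain number, standing in for the output of the averaging step.

```python
def squared_difference_image(pixels, mean):
    """Return a new image whose pixels are (pixel - mean) squared."""
    return [[(value - mean) ** 2 for value in row] for row in pixels]

# Made-up single-channel image with values in 0..1.
image = [
    [0.0, 0.5, 1.0],
    [0.25, 0.5, 0.75],
]

# The global mean of this sample, standing in for the averaging step's output.
mean = 0.5

squared = squared_difference_image(image, mean)
```

Each output pixel depends only on the matching input pixel and the single mean value, which is the kind of per-pixel work a custom image filter is suited to.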

The third step is to calculate the mean of the output of the custom Core Image filter using the CIAreaAverage filter again, which not only sums the value of each pixel in the image, but also divides the sum by the number of pixels. The result of this third step is the variance. To obtain the standard deviation, a second custom Core Image filter calculates the square root of each pixel in that result:

Figure: A second custom Core Image filter that calculates the square root of each pixel in the source image, which, in this case, is the variance of the image
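Putting the steps together, here is a minimal Python sketch of the whole pipeline: average, subtract and square, average again, then take the square root. It mirrors the arithmetic only. The helper `area_average` is a stand-in written for this example and is not the CIAreaAverage filter, the image is a made-up single channel, and the result is checked against Python's own `statistics` module as a sanity test.

```python
import math
import statistics

def area_average(pixels):
    """Stand-in for an averaging step: the mean of every pixel in the image."""
    flat = [value for row in pixels for value in row]
    return sum(flat) / len(flat)

def global_variance_and_std(pixels):
    """Compute the global variance and standard deviation of an image."""
    mean = area_average(pixels)                                    # step 1
    squared = [[(v - mean) ** 2 for v in row] for row in pixels]   # step 2
    variance = area_average(squared)                               # step 3
    return variance, math.sqrt(variance)                           # step 4

# Made-up single-channel image with values in 0..1.
image = [
    [0.0, 0.5, 1.0],
    [0.25, 0.5, 0.75],
]

variance, std_dev = global_variance_and_std(image)

# Cross-check against the population variance and standard deviation
# computed by the standard library.
flat = [value for row in image for value in row]
matches_stdlib = (
    math.isclose(variance, statistics.pvariance(flat))
    and math.isclose(std_dev, statistics.pstdev(flat))
)
```

The second call to `area_average` is what replaces the explicit summation: averaging the squared differences is the same as summing them and dividing by the pixel count.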

It looks easy and simple; but, based on the plethora of questions on how to do this, which are answered by the most unusable of answers (as found on sites like stackoverflow.com), it must not be for many.

NOTE | To implement this procedure in an iOS app, you must be using iOS 9 or later, as CISampler is not available in earlier versions; without CISampler, only one image can be passed to a custom Core Image filter (OpenGL ES).