If you ever wonder what I fight with demons about most these days, believe it or not, it's whether I can pull off stuff like this...

Today, I wrote code that uses multicast delegation to split a single iPhone camera feed among multiple displays, each of which can be processed independently and simultaneously in real time, without affecting app performance or significantly increasing memory and energy consumption. It displays video in multiple windows as the camera acquires it, with a filter applied at a different setting in each one.

The point is to monitor the same scene for different types of demonic activity simultaneously, which requires separate windows, each displaying video processed to reveal and/or enhance the visibility of one specific type.

In order to process or filter a video feed in your app, you need the image data. Although you can set up multiple preview layers to display the camera feed, the video will look the same in each one. That's because the camera delivers the image data required for processing (e.g., applying filters) to only one recipient. It doesn't make copies and route them to multiple objects in your app.
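In AVFoundation, the single recipient is the object passed to `AVCaptureVideoDataOutput`'s `setSampleBufferDelegate(_:queue:)`, which accepts one delegate. Multicast delegation works around that: one object receives every frame and forwards the same frame to every registered listener. The sketch below is a minimal, framework-free illustration of the pattern, not the code from this post. `Frame` (a plain array of floats standing in for a `CVPixelBuffer`), `FrameConsumer`, `MulticastFrameDelegate`, and `RecordingView` are hypothetical names. In an app, `didReceive(_:)` would be called from `captureOutput(_:didOutput:from:)`.

```swift
import Foundation

/// Hypothetical stand-in for a camera frame; a real app would pass a CVPixelBuffer or CMSampleBuffer.
typealias Frame = [Float]

/// Anything that wants frames, e.g. one filtered view per window.
protocol FrameConsumer: AnyObject {
    func consume(_ frame: Frame)
}

/// Weak box so the multicast delegate never keeps a consumer alive on its own.
private struct WeakConsumer {
    weak var value: FrameConsumer?
}

/// Receives each frame once and forwards that same frame to every registered consumer.
final class MulticastFrameDelegate {
    private var consumers: [WeakConsumer] = []

    func add(_ consumer: FrameConsumer) {
        consumers.append(WeakConsumer(value: consumer))
    }

    /// Called once per captured frame (hypothetically from captureOutput(_:didOutput:from:)).
    func didReceive(_ frame: Frame) {
        // Forget consumers that have been deallocated, then forward to the rest.
        consumers.removeAll { $0.value == nil }
        for box in consumers {
            box.value?.consume(frame)
        }
    }
}

/// Hypothetical consumer that just remembers the last frame it was given.
final class RecordingView: FrameConsumer {
    let name: String
    private(set) var lastFrame: Frame = []

    init(name: String) { self.name = name }

    func consume(_ frame: Frame) {
        lastFrame = frame
    }
}
```

Because the frame is handed to each consumer rather than copied up front, extra views add forwarding calls, not extra capture work. This sketch is not thread-safe: register consumers before frames start arriving, or on the same queue that delivers them.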

To do that, you'd have to copy the data and redistribute it yourself, which means consuming far too much memory and a lot of processing power (and energy) to forward it. Add the two together, and you end up with performance bottlenecks. On top of that, you'd have to create and maintain separate, custom views; even without piping video to them, multiple views always mean more overhead.
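For a rough sense of scale, here is the copying cost under assumed numbers (not measurements from this app): 1920×1080 frames at 4 bytes per pixel (BGRA), three extra copies per frame so that four views each get one, at 120 frames per second:

$$
\begin{aligned}
1920 \times 1080 \times 4\ \text{B} &= 8{,}294{,}400\ \text{B} \approx 7.9\ \text{MiB per frame} \\
3 \times 8{,}294{,}400\ \text{B} \times 120\ \text{s}^{-1} &= 2{,}985{,}984{,}000\ \text{B/s} \approx 3.0\ \text{GB/s}
\end{aligned}
$$

Under those assumptions, roughly 3 GB of memory traffic every second would go to duplication alone, before a single filter runs.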

Despite quadrupling the video feed and the image processing tasks, the energy impact was negligible.

Even while processing eight video feeds individually and in different ways (an exposure adjustment filter was applied separately to each, using a different EV setting), the app displayed them at 120 frames per second.
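An exposure adjustment of a given number of EV (stops) scales linear color values by 2^EV, so +1 EV doubles brightness and -1 EV halves it; as far as I know, this is also what Core Image's `CIExposureAdjust` filter does with its `inputEV` parameter. The CPU sketch below only illustrates the per-view math. `adjustExposure(_:ev:)` and the eight `evSettings` values (half-stop steps from -2 to +1.5 EV) are hypothetical, not the settings used in the app.

```swift
import Foundation

/// Shifts exposure by `ev` stops: every linear channel value is multiplied by 2^ev.
/// Sketch only; a real app would run a GPU filter (e.g. CIExposureAdjust) on each view's frame.
func adjustExposure(_ pixels: [Float], ev: Float) -> [Float] {
    let gain = Float(pow(2.0, Double(ev)))
    return pixels.map { $0 * gain }
}

/// Hypothetical per-view settings: eight views, -2 EV to +1.5 EV in half-stop steps.
let evSettings: [Float] = stride(from: -2.0, through: 1.5, by: 0.5).map { Float($0) }
```

Each view would call `adjustExposure` with its own entry from `evSettings` on the same incoming frame. Results are not clamped, so values above 1.0 would need clamping or tone mapping before display.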

|

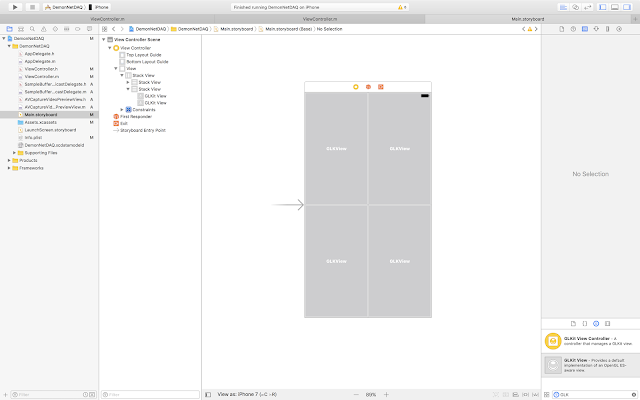

| Four views, but of the same class; no references (outlets) to the views were created. As far as the app is concerned, there is only one view |

Update on May 23rd, 2017

Even better than that was a Grand Central Dispatch (GCD) variation on the same theme I tried later:

The highlighted area of the source code editor pane (Xcode) is all the code required to do the same thing as the protocol checker/multicast delegation solution shown first in this post.
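A GCD take on the same fan-out can be sketched with `DispatchQueue.concurrentPerform(iterations:execute:)`, which runs one iteration per view in parallel and returns only after all of them finish. This is a minimal illustration under my own assumptions, not the code in the Xcode screenshot: `Frame`, `FrameHandler`, and `fanOut(_:to:)` are hypothetical names, and each view's processing is assumed to be expressible as a closure.

```swift
import Foundation
import Dispatch

/// Hypothetical stand-in for a camera frame (a real app would use a CVPixelBuffer).
typealias Frame = [Float]

/// One view's processing step, e.g. an exposure adjustment at that view's EV setting.
typealias FrameHandler = (Frame) -> Frame

/// Runs every handler on the same frame concurrently and returns the outputs in handler order.
func fanOut(_ frame: Frame, to handlers: [FrameHandler]) -> [Frame] {
    var results = [Frame](repeating: [], count: handlers.count)
    let lock = NSLock()
    // concurrentPerform spreads iterations across available cores and blocks until all finish.
    DispatchQueue.concurrentPerform(iterations: handlers.count) { index in
        let output = handlers[index](frame)
        lock.lock()
        results[index] = output // the lock serializes mutation of the shared results array
        lock.unlock()
    }
    return results
}
```

Because `concurrentPerform` waits for every iteration, one slow view holds up the whole frame. If views should be allowed to lag independently, dispatching each handler asynchronously to its own queue and tracking completion with a `DispatchGroup` is an alternative.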